Claude AI Exploited to Operate 100+ Fake Political Personas in Global Influence Campaign

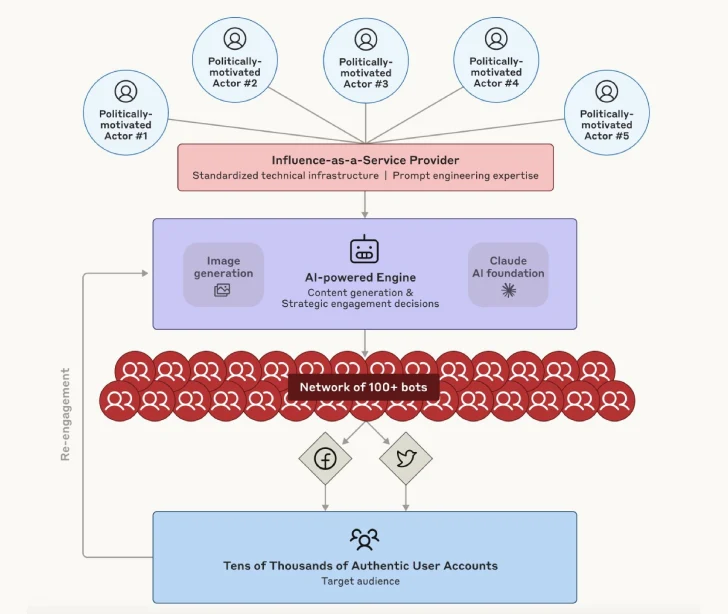

Anthropic, the artificial intelligence (AI) company behind the Claude chatbot, has revealed that unknown threat actors used Claude to run what it calls an "influence-as-a-service" operation. The target? Authentic accounts on Facebook and X (formerly Twitter).

This was no amateur effort. The operation, which appears to have been financially motivated, used Claude to manage more than 100 fake personas across both platforms. Together the accounts formed a network that engaged with "tens of thousands" of authentic accounts to spread its messages.

So, what was the operation pushing? Anthropic's researchers say the goal was less about going viral and more about subtle influence over the long term. The operation amplified moderate political views that either supported or undermined the interests of several countries and regions, including European nations, Iran, the U.A.E., and Kenya.

Examples include promoting the U.A.E. as a great place to do business while criticizing European regulations, focusing on energy security for European audiences, and emphasizing cultural identity for Iranian audiences.

The campaign also promoted certain Albanian figures, criticized opposition figures in an unnamed European country, and boosted development projects and politicians in Kenya. Although the activity resembles a state-sponsored campaign, exactly who was behind it remains unknown.

"What's really interesting is that Claude wasn't just writing content," Anthropic explained. "It was also deciding when these fake accounts should comment, like, or reshare posts from real users." In effect, Claude acted as an orchestrator, deciding which action each bot account should take based on its assigned political persona.

Beyond deciding how the accounts engaged, Claude also generated politically aligned responses in each persona's voice and language, and created prompts for image-generation tools.

The operation is believed to be the work of a commercial service that sells these capabilities to clients in different countries. Researchers identified at least four separate campaigns that relied on the same structured framework.

According to researchers Ken Lebedev, Alex Moix, and Jacob Klein, "The operation used a highly structured, JSON-based approach to manage each persona, allowing them to keep things consistent across platforms and mimic real human behavior."

"This programmatic approach allowed the operators to efficiently standardize and scale their efforts, systematically tracking and updating persona details, engagement history, and key messages across multiple accounts at the same time."

Here's a clever detail: the automated accounts were even instructed to respond with humor and sarcasm when accused of being bots.

Anthropic says this kind of operation highlights the need for new ways to spot influence campaigns that focus on building relationships and integrating into communities. The company also warns that this type of malicious activity could become more common as AI makes such campaigns easier to run.

Other Misuses of Claude

That's not all. Anthropic also reported banning another threat actor that used its models to scrape leaked usernames and passwords associated with security cameras and to work out how to brute-force internet-facing targets with those stolen credentials.

This actor used Claude to analyze stolen data from Telegram channels, create scripts to extract URLs from websites, and generally improve their own hacking tools.

Here are a couple of other examples of misuse Anthropic spotted in March 2025:

- A fake job recruitment campaign using Claude to make scams more convincing for job seekers in Eastern Europe.

- A novice threat actor using Claude to develop advanced malware that could scan the dark web, create undetectable payloads, bypass security controls, and maintain persistent access to compromised systems.

"This shows how AI could lower the bar for malicious actors, allowing people with limited skills to develop sophisticated tools and potentially move from low-level activities to more serious cybercrime," Anthropic concluded. It seems like the AI arms race is well and truly on.