Citrix Under Fire: Hackers Race to Exploit Flaws Using AI-Powered Tool

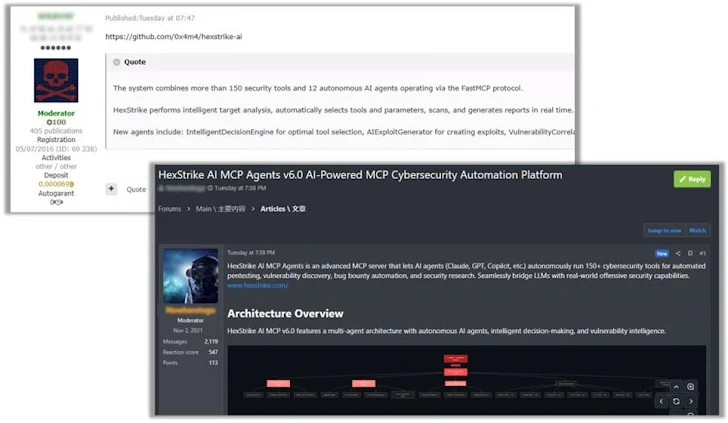

Cybercriminals are wasting no time exploiting the latest Citrix vulnerabilities. Reports indicate attackers are already trying to twist HexStrike AI, a recently unveiled AI-powered offensive security tool, to their advantage and use it against these newly disclosed security holes.

So, what is HexStrike AI? According to its website, it's an AI platform designed to automate the process of finding vulnerabilities. The idea is to help security teams with "red teaming," bug bounty programs, and CTF (capture the flag) competitions.

The platform, which is open-source and hosted on GitHub, integrates with more than 150 security tools. It aims to streamline everything from network reconnaissance to web application security testing and even cloud security. It also includes specialized AI agents for finding vulnerabilities, developing exploits, and planning attack strategies.

But here's the catch. A recent report from Check Point suggests that bad actors are actively trying to weaponize HexStrike AI to exploit vulnerabilities. That's right, a tool meant to *strengthen* security is potentially being used to *break* it.

"This marks a pivotal moment," said Check Point. "A tool designed to strengthen defenses has been claimed to be rapidly repurposed into an engine for exploitation...driving real-world attacks."

Discussions on darknet forums indicate that some cybercriminals already claim to have used HexStrike AI to exploit three security flaws that Citrix disclosed last week. Worse, they are also flagging potentially vulnerable NetScaler instances and selling access to them to other criminals.

The implications are pretty serious. Check Point warns that this kind of malicious use shrinks the window of opportunity to patch systems after a vulnerability is disclosed. It also automates and speeds up the entire exploitation process.

According to Check Point, this automation also removes some of the manual effort that exploitation requires and lets failed attempts be retried until one succeeds. This ultimately increases the "overall exploitation yield."

Their recommendation? "The immediate priority is clear: patch and harden affected systems." They also warn that "Hexstrike AI represents a broader paradigm shift, where AI orchestration will increasingly be used to weaponize vulnerabilities quickly and at scale."
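For teams acting on that advice, the first step is usually working out which appliances are still behind. Here is a minimal sketch of that check in Python. Everything in it is an assumption for illustration: the hostnames, the inventory, and the "minimum fixed" build numbers are placeholders, and the real values should come from the vendor's security bulletin.

```python
from typing import Dict, List, Tuple

Build = Tuple[int, ...]


def parse_build(text: str) -> Build:
    """Turn a build string such as '14.1-47.48' into (14, 1, 47, 48)."""
    return tuple(int(part) for part in text.replace("-", ".").split("."))


def needs_patch(installed: str, minimum_fixed: Dict[str, str]) -> bool:
    """Return True if the installed build is older than the fixed build
    for its release branch. Branches with no listed fix are flagged too,
    so that they get a manual look."""
    build = parse_build(installed)
    branch = ".".join(str(n) for n in build[:2])
    fixed = minimum_fixed.get(branch)
    if fixed is None:
        return True
    return build < parse_build(fixed)


def stale_hosts(inventory: Dict[str, str], minimum_fixed: Dict[str, str]) -> List[str]:
    """List the hosts that still need patching, sorted by name."""
    return sorted(h for h, b in inventory.items() if needs_patch(b, minimum_fixed))


# Placeholder data: replace with values from the vendor advisory and your own inventory.
MINIMUM_FIXED = {"14.1": "14.1-47.48", "13.1": "13.1-59.22"}
INVENTORY = {
    "gw-edge-01": "14.1-43.50",
    "gw-edge-02": "14.1-47.48",
    "gw-lab-01": "12.1-55.330",
}

if __name__ == "__main__":
    for host in stale_hosts(INVENTORY, MINIMUM_FIXED):
        print(f"{host}: patch or review")
```

Flagging unknown branches rather than skipping them is a deliberate choice here: with the patch window shrinking, a false alarm costs less than an overlooked appliance.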

And it's not just HexStrike AI. Researchers from Alias Robotics and Oracle Corporation recently published a study highlighting the dangers of AI-powered cybersecurity agents like PentestGPT. Their concern? These tools are vulnerable to "prompt injection" attacks, where hidden instructions can effectively turn them *into* cyber weapons.

Researchers Víctor Mayoral-Vilches and Per Mannermaa Rynning said that "the hunter becomes the hunted, the security tool becomes an attack vector, and what started as a penetration test ends with the attacker gaining shell access to the tester's infrastructure."

Their conclusion is blunt: "Current LLM-based security agents are fundamentally unsafe for deployment in adversarial environments without comprehensive defensive measures."
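To make the prompt injection risk concrete, here is a rough Python sketch of one partial safeguard: screening what a scanned target sends back before an AI agent ever reads it. This is not taken from the study or from any of the tools named above. The function names, the patterns, and the wrapper tag are all hypothetical, and pattern matching alone can be evaded, so treat it as one layer of defense at most.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical examples of instruction-like text that has no business
# appearing in a scan result. A real deployment would need a broader set.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore\s+(?:all\s+|any\s+)?(?:previous|prior|above)\s+instructions", re.I),
    re.compile(r"you\s+are\s+now\b", re.I),
    re.compile(r"\bbase64\s+-d\b.*\|\s*(?:sh|bash)\b", re.I),
]


@dataclass
class ScreenedOutput:
    text: str
    flagged: bool
    matches: List[str]


def screen_tool_output(raw: str) -> ScreenedOutput:
    """Check raw tool output against the suspicious patterns."""
    matches = [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(raw)]
    return ScreenedOutput(text=raw, flagged=bool(matches), matches=matches)


def wrap_as_data(screened: ScreenedOutput) -> str:
    """Withhold flagged output; otherwise mark it clearly as untrusted data."""
    if screened.flagged:
        return "[tool output withheld: possible injected instructions, needs human review]"
    return "<untrusted_tool_output>\n" + screened.text + "\n</untrusted_tool_output>"


if __name__ == "__main__":
    banner = "HTTP/1.1 200 OK\nServer: nginx"
    print(wrap_as_data(screen_tool_output(banner)))
```

The point of the design is that anything returned by a target is handled as data rather than as instructions, and anything that looks like an instruction goes to a human instead of the model.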